Design for Activism

Design for Activism Lab @ Border Sessions

By Nina Nout

On June 13, we had the pleasure of hosting our Design for Activism lab at the Border Sessions Festival. The goal of the lab was to design a workflow that creates opportunities for tech experts and companies to support human rights activists online. In other words, we wanted to find out how to engage tech companies in the long term, so that human rights defenders and tech companies can establish and maintain a sustainable cooperation. In this lab, we also wanted to introduce people to the issues human rights defenders face online, from harassment and arbitrary banning to threats of violence.

We kicked off our lab by getting to know two human rights defenders from Rwanda and Pakistan. Their stories gave us insights into the complicated dynamics of online activism. Technology has been an incredibly powerful tool for them to communicate with others and raise awareness of human rights violations, but it has also exposed them to threats and harassment.

Karen van Luttervelt from We Are listens attentively to the stories of our human rights defenders.

Online harassment is a worrying trend, as a 2017 study conducted in the United States by the Pew Research Center shows. According to Pew, 66% of adult internet users have seen online harassment and 41% have personally experienced it [1]. Among human rights defenders these numbers may be even higher, as they stand up for the rights of minority groups and victims, resist attempts by the State and extremists to silence them, and hold existing power structures accountable. Unfortunately, big tech companies have not yet taken adequate steps to address and prevent online harassment. An Amnesty International report on online violence against women on Twitter explains: “The company’s failure to meet its responsibilities regarding violence and abuse means that many women are no longer able to express themselves freely on the platform without fear of violence or abuse.” [2].

During the Border Sessions lab, we used Twitter’s policy on online harassment as a case study to demonstrate the power imbalance that human rights defenders experience. In a role-play simulation, we examined the lived experiences of one of our human rights defenders in detail. We divided the participants into three smaller groups, representing human rights defenders, State actors, and Twitter, to discuss how Twitter currently handles online disputes between individuals and the government. This was followed by a discussion about how Twitter could do more to support human rights defenders on its platform. The main question that emerged from the discussion was how to translate freedom of expression to an online environment. This proved to be a multi-faceted problem. On the one hand, Twitter gives human rights defenders a platform to voice their opinions. On the other, the platform enables people to harass and intimidate human rights defenders and to spread false information.

The exercise was eye-opening. As the discussion progressed, our participants learned that tech companies frequently use freedom of expression as an excuse not to take action. We also discussed the technological aspects of the problem without losing sight of its social dimensions. Another interesting finding was that the human rights defenders’ group was overshadowed by the arguments of Twitter and the government, as is so often the case in real life.

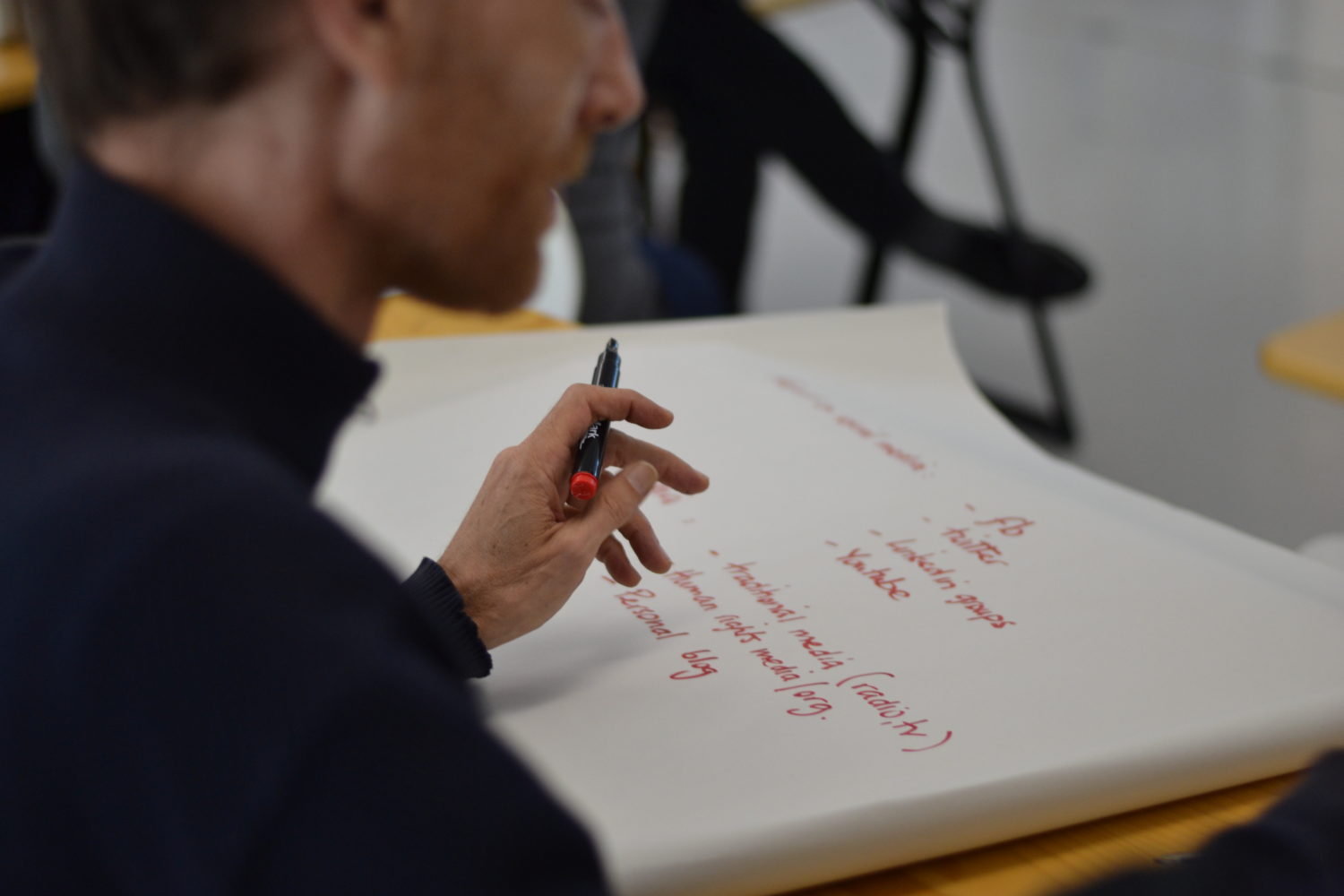

Steen Bentall, Head of The Hague Hacks, writes down points discussed during the brainstorming session.

After the simulation we took a short lunch break before we continued with the second part of the day. We split up into two groups to address the question ‘How do we engage tech companies?’. The morning had helped to create a basic understanding of the importance of tech to human rights, and so we built upon this idea during our brainstorming sessions.

We were very happy to see how motivated everyone was to discuss possible solutions. Both groups came up with insightful practical steps and focus points on how to establish and maintain contact with tech companies. The knowledge we acquired from the brainstorming sessions is incredibly valuable. We will incorporate some of the findings into future collaborations with tech companies to ensure long-term support for human rights defenders.

This lab was another example of how important it is for people from different disciplines to come together and talk about tech and human rights. It helped us focus on possible ways of involving tech companies. Our Nextview event also had some great results. We will soon update you on that event too!

If you are interested in The Hague Hacks, or if you would like to learn more, collaborate, volunteer, or just want to drop a note, we’re happy to hear from you. Send us an email at haguehacks@thehaguepeace.org

References

[1] Online Harassment 2017. (2017). Pew Research Center.

[2] Toxic Twitter – A Toxic Place For Women. (n.d.). Amnesty International.
